
Sinking logs to BigQuery

I wanted to know how many times the Cloud Functions in my project are being triggered.

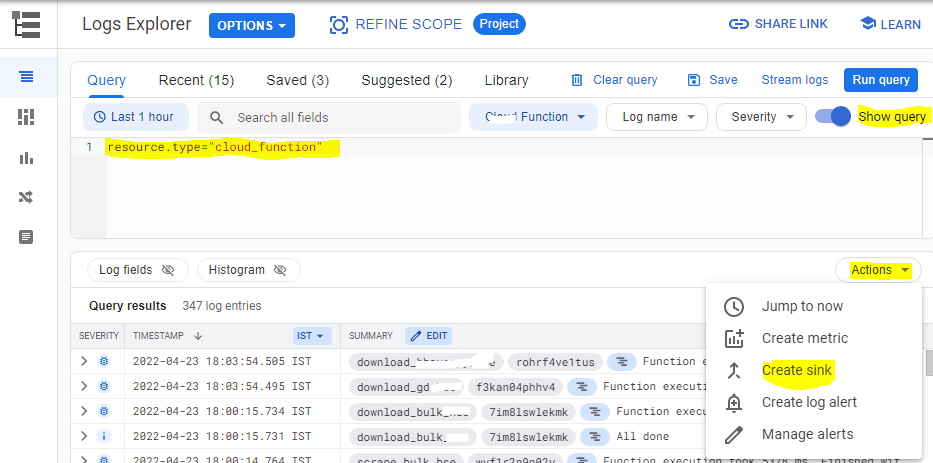

Go to the Google Cloud Console and open Logs Explorer.

Click Show query. By default, it showed the following logging query:

```
resource.type="cloud_function"
resource.labels.function_name="test_function1"
resource.labels.region="asia-south1"
```

Since I wanted to transfer all the logs related to Cloud Functions, I kept only the condition below:

```
resource.type="cloud_function"
```

- Click More Actions (or Actions) and select Create Sink.

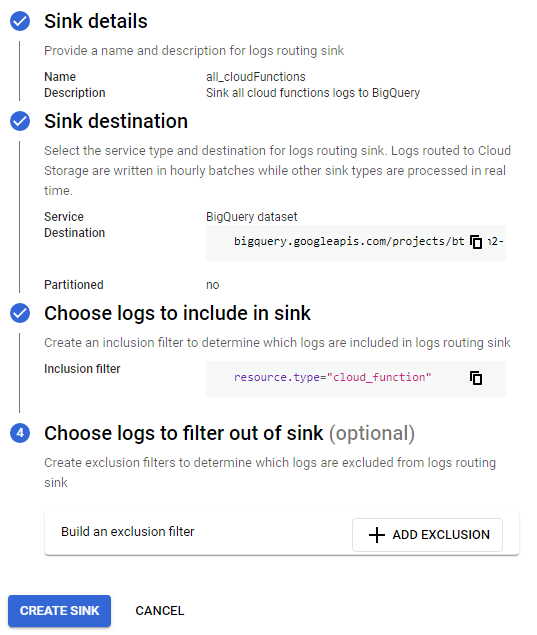

- This opens Logging -> Log Router, where I filled in the sink details and chose a BigQuery dataset as the sink destination.

- Click Create Sink.
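The same sink can also be created from a script instead of the console. The sketch below is one way to do it, not the method used in this post. It assumes the `google-cloud-logging` Python library is installed, application default credentials are configured and the BigQuery dataset already exists. The project ID, dataset and sink name you pass in are your own choices. Depending on your IAM setup, the sink's writer identity may also need write access to the dataset.

```python
# Same filter that was kept in Logs Explorer above.
CLOUD_FUNCTIONS_FILTER = 'resource.type="cloud_function"'


def bigquery_sink_destination(project_id: str, dataset_id: str) -> str:
    """Destination string Cloud Logging uses for a BigQuery dataset sink."""
    return f"bigquery.googleapis.com/projects/{project_id}/datasets/{dataset_id}"


def create_cloud_functions_sink(project_id: str, dataset_id: str, sink_name: str):
    """Create the sink if it does not exist yet and return it.

    Assumes google-cloud-logging is installed, credentials are configured,
    and the target BigQuery dataset already exists.
    """
    # Imported lazily so the helpers above work without the library installed.
    from google.cloud import logging as cloud_logging

    client = cloud_logging.Client(project=project_id)
    sink = client.sink(
        sink_name,
        filter_=CLOUD_FUNCTIONS_FILTER,
        destination=bigquery_sink_destination(project_id, dataset_id),
    )
    # Only create it once; re-running the script is then harmless.
    if not sink.exists():
        sink.create()
    return sink
```

For example, `create_cloud_functions_sink("my-project", "gcp_logging", "cloud-functions-sink")`, where `my-project` and `cloud-functions-sink` are hypothetical placeholders.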

Go to BigQuery and run a query like the one below to see how many times each Cloud Function was triggered in a day:

```sql
select count(resource.labels.function_name) as invocation_total,
  resource.labels.function_name,
  resource.labels.region
from `<<project-name>>.gcp_logging.cloudfunctions_googleapis_com_cloud_functions_20220423`
where textPayload = 'Function execution started'
group by resource.labels.region, resource.labels.function_name
```

Note: the sink writes to a new table each day (the date is the table-name suffix, `20220423` above), so change the suffix to query a different day.
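Because there is one table per day, counting across several days means querying several tables. One option, swapped in here rather than taken from this post, is a BigQuery wildcard table filtered on `_TABLE_SUFFIX`. The Python sketch below only builds that SQL string. It assumes the table prefix shown above and a `start`/`end` date range of your choosing. It also returns the count per day alongside the function and region.

```python
from datetime import date

# Prefix of the daily tables the sink writes (from the table name above).
TABLE_PREFIX = "cloudfunctions_googleapis_com_cloud_functions_"


def invocation_count_query(project: str, dataset: str, start: date, end: date) -> str:
    """Build a query counting invocations per day, function and region.

    A wildcard table covers every daily table whose YYYYMMDD suffix falls
    between `start` and `end` (inclusive).
    """
    if start > end:
        raise ValueError("start must not be after end")
    return (
        "select _TABLE_SUFFIX as day,\n"
        "  resource.labels.function_name,\n"
        "  resource.labels.region,\n"
        "  count(resource.labels.function_name) as invocation_total\n"
        f"from `{project}.{dataset}.{TABLE_PREFIX}*`\n"
        "where textPayload = 'Function execution started'\n"
        f"  and _TABLE_SUFFIX between '{start:%Y%m%d}' and '{end:%Y%m%d}'\n"
        "group by day, resource.labels.function_name, resource.labels.region\n"
        "order by day"
    )
```

The resulting string can be pasted into the BigQuery console. It can also be run with the `google-cloud-bigquery` library via `bigquery.Client().query(sql).result()`, assuming that library and credentials are set up.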