Notes from GSE UK Conference 2021

— db2-notes, mainframe — 36 min read

- 2AD - The joy of joins

- 6AD - Mainframe Hardware 101

- 2AE - Intro to zOS

- 2AH - Squeeze Your Data with Db2A History of compression

- 5AK - zOS Container Extensions Part 1 : An Introduction to Running Containers Inside zOS

- 7AM - APPLCOMPAT, NULLID and Me

- 2AO - How does Db2 z transform your SQL query for better performance

- 2AP - Db2 12 for zos and Asynchronous Systems Managed CF Structure Duplexing Update

- 2AR - ISPF Master Class - Edit from A to ZLAST

- 6AT - zOS Terminology

- 4AU - Db2 zOS housekeeping best practices

- 6AU - Mainframe Performance Tuning

- 5AV - IBM zOS on z15 Hardware

- 6AV - How long is too long Multi-period Service Classes

- 2AY - Db2 Clients, Connect, gateways - what do I really need

- 2BB - 2021 Edition - Db2 for zOS Development - SWAT Tales

- 6BB - zOS program fetch, how programs are found for execution

- 5BC - Protecting data through encryption of Coupling Facility Structures

- 7BE - Automating DB2 Schema Change - A Practical Guide

- 4BI - Db2 for z/OS Continous Delivery - Five Years Later

- 4BJ - Parallel Sysplex Basics

2AD - The joy of joins

Speaker: Emil Kotrc

Source : Slides

Left outer join

- Driver table is on the left side

- NULL supplying table is on the right side

Right outer join

- Driver table is on the right side

- NULL supplying table is on the left side

There is no performance difference between left and right as Db2 z/OS will change do right as left

Full outer join

- Driver and NULL supplying tables on both sides

Outer joins and filtering conditions

1-- Query 1 : Filtering data from resultset2SELECT d.deptno, d.deptname, e.lastname3FROM dept d LEFT JOIN emp e4ON d.deptno = e.workdept5WHERE d.deptno like 'D%'6-- Note : Filtering in WHERE clause happens first and then join7

8-- Query 2 : Filtering rows to join(does not filter data from result set)9SELECT d.deptno, d.deptname, e.lastname10FROM dept d LEFT JOIN emp e11ON d.deptno = e.workdept12AND d.deptno like 'D%'13-- Note : Here it joins only department starts with 'D%' and other rows are retreived as its LEFT JOIN.Lateral Join

1-- Shows Top 3 salaries in each department2SELECT workdept, deptname, lastname, salary 3FROM dept d, 4TABLE(SELECT workdept, lastname, salary FROM emp e5 WHERE e.workdept = d.deptno6 ORDER BY salary DESC7 FETCH FIRST 3 ROWS ONLY)8ORDER BY deptno, salary DESC, lastname;By adding TABLE keyword(also known as lateral correlation) Db2 allows a join of a table or a nested table expression, which can have a correlated reference to the previous(left) table, not just a reference to a table from a higher level. This is called lateral join.

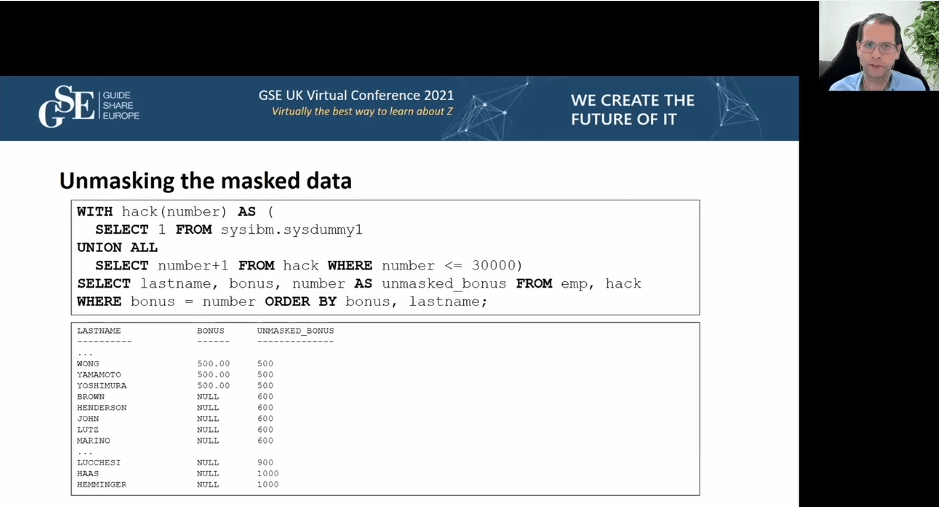

Masking Data

Masking bonus column

1CREATE MASK bonus_mask ON emp2FOR COLUMN bonus3RETURN4 CASE5 WHEN (bonus > 500.00) THEN NULL6 ELSE bonus7 END8ENALBE;9

10ALTER TABLE emp ACTIVATE COLUMN ACCESS CONTROL;

Mask is not encryption

Overview of Join Methods

Nested loop join

- The inner table is scanned repeatedly

- As many times as qualifying rows from the outer table

- Used when

- The outer table is small or the number of qualifying rows is small

- Highly clustered index exists on the join columns of the inner table

- Or Db2 can create a sparse index on the inner table

- No join columns exist(Cartesian join, cross join)

- Db2 can sort the outer table under certain conditions

- Join columns are not in the same sequence

- Join columns of the outer table has no index

- The index is poorly clustered

- Sparse Index (Db2 z/OS)

- A sparse index is an index pointing to a range of n contiguous rows rather than a specific RID

- Hasing support since Db2 10

- Access to inner table is more efficient when there is no efficient index

- A sort of the outer table is avoided

Merge scan join

- Merge join or sort merge join

- Requires one or more equi predicates

- Db2 scans both tables in order of the join columns

- Db2 may sort the tables

- The inner table is put into the work file

- The outer only if sorted

- When a row from outer matches a row from inner, a combined row is returned.

- Continue with another row from inner

- When a match is no longer found, Db2 reads another row of the outer

- If a unmatched row is found

- It is discarded for an inner join

- It is discarded if it is a left join and it comes from inner table

- It is kept if it is a left join and it comes from an outer table

- It is kept for full outer join

- Used when

- One or more equi predicates

- Many to many join

- Large tables with no indexes with matching columns

- Few columns are selected on inner tables

- This is the case when a Db2 sort is used

- The fewer the columns to be sorted, the more efficient the sort is

Hybrid join

- Applies only to inner join

- Requires an index on the join column on the inner table

- Db2 scans the outer table

- Joins the outer table with record IDs(RIDs) from index on the inner(phase 1)

- Db2 sorts the data in outer table and the RIDs(phase 2)

- Db2 retrieves data from the inner table using list prefetch

- Db2 combines the data from inner and from phase 2

- Used when

- A non-clustered index or indexes are used on the join columns of the inner table

- The outer table has duplicate qualifying rows

Hash join

- Requires one or more equi predicates

- Build phase - Db2 reads the inner table and builds an in memory hash table

- For qualified rows - each key is hashed and stored in the hash table with the row

- Db2 maintains a chain of collisions

- Probe phase - Db2 reads the outer table

- The key is hashed and searched in the hash table

- Db2 for z/OS equivalent

- Nested loop join with sparse index and hashing

Star join

- Join tables from a star schema

- A fact table with a number of dimension tables

- Star schema involves three or more tables

- Db2 joins dimension tables to the fact table according to the multi-coumn index that is defined on the fact table

- requires a single index on the fact table that supports the filtering that is provided by the dimension tables of the start schema

6AD - Mainframe Hardware 101

Speaker: Lena Rösch

Source : Slides

2AE - Intro to zOS

Speaker: Steve Warren

Source : Slides

Mainframe was named z/OS in 2001 and before that it was called OS/390(1996).

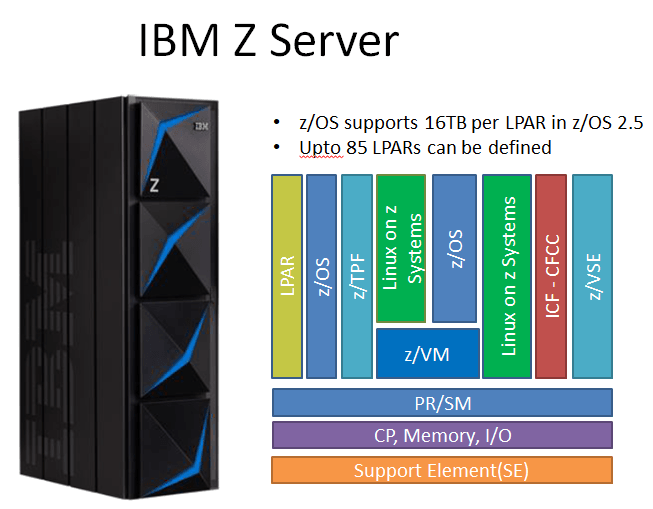

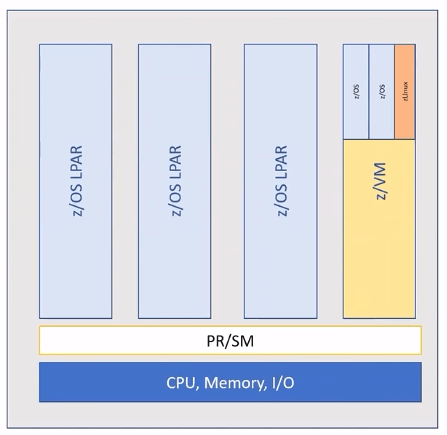

Each mainframe box is called as a CPC(central processor complex) that includes main storage, one or more central processors, timers, and channels.

Each CPC can have upto 85 LPARS(Logical Partitions) meaning upto 85 different instances of OS can be running concurrently on one box and those OS can have their own dedicated memory, processors and shares resources and can have dedicated I/O. Each LPAR can have 16TB of memory in z/OS 2.5.

Z/OS is a collection of elements and base elements are always included in the z/OS as they deliver essential OS functions. Other base elements are,

- Communication Server, z/OSMF, TSO, ISPF, JES2

- There are optional features which are installed in addition to base elements like SDSF, RMF, JES3, DFSMShsm, DFSMSdss

z/OS Software Stack

- Operating System : Provides critical set of system services made possible by the z/Architecture and firmware. Contains base elements and optional features.

- Middleware : Software that is neither operating system code nor end-user application. Some middlewares are Db2, CICS, IMS, WAS, MQ, SAP(Systems, Applications and Products in Data Processing), TSO/E

- Applications : Provide end-user interface to product solutions. Like ISPF, CICS Applications.

Storage and Files

DASD - Direct Access Storage Device

- Usually storages are kept in a separate box and they called as DASD Devices

- DASD are Hard Drives and they are made up of volumes and volumes need serial number(VOLSER) which is made up to 1 to 6 characters

- Multiple DASD volumes are attached to mainframe operating system

Dataset

- Files are stored in preallocated areas on the hard disk. In mainframe you have to create space in advance.

- Dataset name is made up of 1 to 22 segments(21 dots) and each segment separated by a period(.) and limited to 8 characters. First segment is called the High-level qualifier and last segment is called the Low-level qualifier.

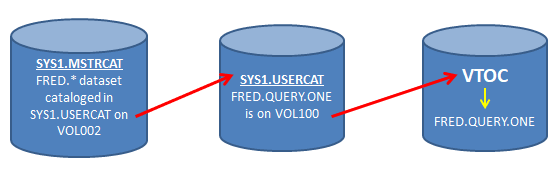

VTOC & Catalog

- Volume Table of Contents(VTOC), each DASD volume has a VTOC that locates a dataset on the volume.

- User catalog contains name and location(VOLSER) information

- There can be thousands of user catalog, to know which catalog contains this information, we have Master catalog. It will basically say, any dataset that starts with this HLQ can be found in this User catalog.

TSO/E

- Time Sharing Option/Extension allows users to log on and interactively share resources

ISPF

- Interactive System Productivity Facility

- Its a hierarchical view of panels(Mainframe UI).

- Using ISPF one can create datasets, write program, submit jobs and check logs at various screens.

z/OS Unix

- Unix files are not stored in datsets, they use zSeries File System. Similar to a normal unix system but it runs on z/OS.

- Filenames can be upto 1023 characters and its case-sensitive

- There are multiple ways to access files in z/OS environments.

- iSHELL -

TSO ISHELL - z/OS Unix Directory List Utility - 3.17 in ISPF

- OMVS -

TSO OMVS- useoedit filenameto edit file. - Remote unix interfaces - Telnet, SSH, NFS. With NFS, you can mount unix directories on windows drive using

mvslogin. Slide 102

- iSHELL -

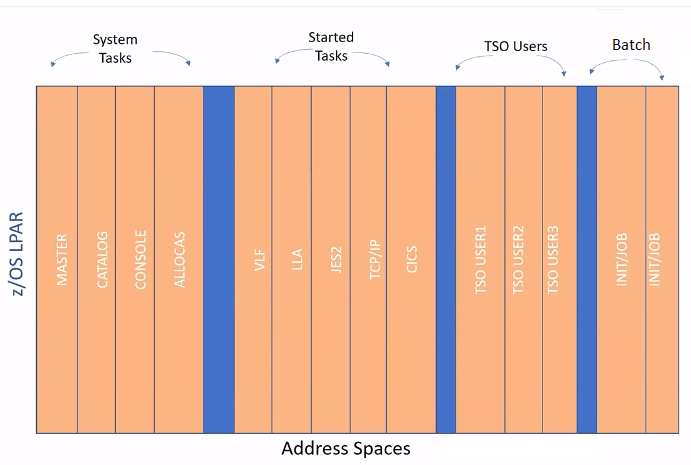

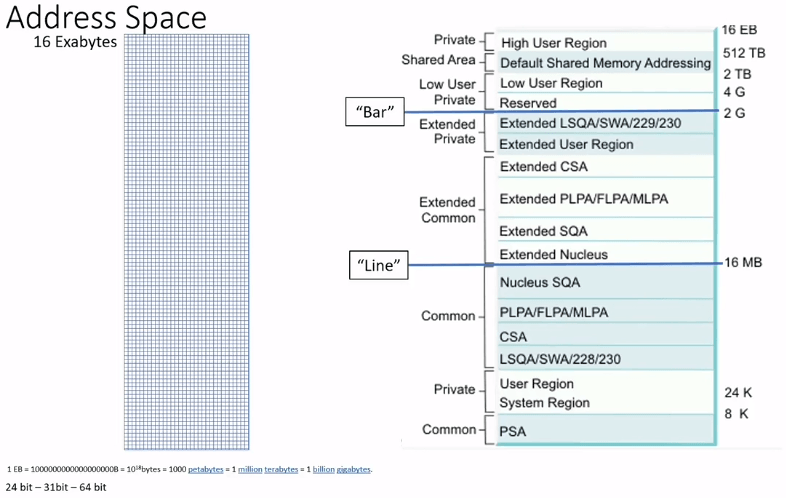

Address Spaces

- Address spaces are range of addresses in virtual storage

- Each user gets an address space containing the same range of addresses as any other user. This give you addressing range where you can load applications and application can load data to process. Each user gets 0-16EB(Exabytes) of addressability.

- z/Os creates address spaces for some of its interal components(~35 address spaces). Eg: Master, PCAuth, RASP, Trace, DumpSrv, XCFAS, GRS....

- In addition there are lots of other address spaces for middleware and applications created like SDSF, VTAM, TCAS, TCPIP...

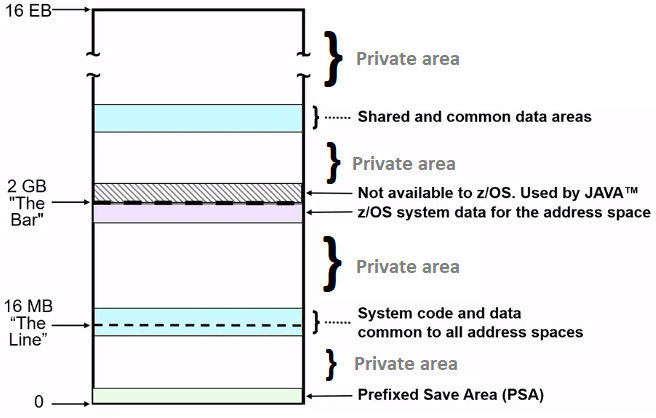

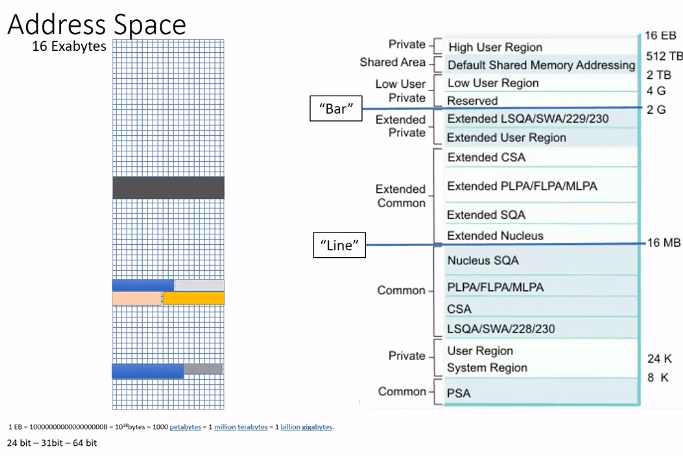

What makes up an Address Space

z/OS has storage ranges that contain code/data that is common to all address spaces. i.e., all applications are referencing same piece of storage regardless of which address space you are in like certain functions of OS need to accessible to all address spaces, certain storage need to be accessible by all address spaces.

Whats left is the Private Area that is available for your use. This areas, normally not accessible by other address spaces. But there are programmable interfaces that allow other applications running in other address spaces to access storage in different address spaces. Your programs will be loaded in this private area in your address space.

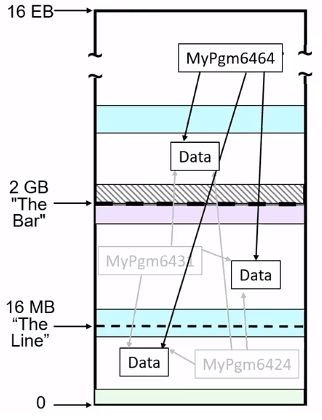

How much memory can your program can address

An address space size is limited to the amount of memory that can be addressed.

- In 1977, an address space was limited to 16MB

- In 1981, an address space size was increased to 2GB. This is when concept of The Line was introduced.

- Programs not changed to support 31-bit addressing had to run and could only access data that was "Below the line"

- Programs that were changed to support 31-bit addressing could run and could also access data that was "Above the line".

- In 2000, an address space was increased to 64-bit or 16EB addressing. During then introduced "The Bar".

Now we needed a way to indicate which addressing mode the program supported.

AMODE : Addressing mode

- 24 : Applications can only address data that is below the line

- 31 : Applications can address data below or above the line

RMODE : Residency mode - Since AMODE 31 programs could be run/loaded above or below the line a way was needed to request where the program should reside.

| AMODE | RMODE | Description |

|---|---|---|

| 24 | 24 | Program can access data below the line and program is loaded below the line |

| 31 | 31 | Program is loaded above the line and can access data that is in BTL or ATL |

| 31 | 24 | Program resides BTL and can access data that is BTL or ATL |

| 24 | 31 | No possible as Branches within program would fail since the target location is in 31-bit storage but the address being used is a 24-bit address. Basically cant have AMODE < RMODE. |

- AMODE 64 was introduced when 64Bit was introduced and data can be accessed above the bar.

Virtual Storage

The amount of memory required by all the running tasks is usually much greater than the amount of real storage available. This brings the concept of Virtual Memory.

Every OS has concepts of Swap disk, swap files.. z/OS is the first OS to have this concept implemented.

When a program is executing, it needs to be in real memory as when processor is executing instructions, it expects the instructions to be in real memory. But when they are not running, they can be in Auxiliary Storage.

Idea of Virtual Storage involves, knowing when bring into real storage and when it can be in auxiliary storage.

Virtual, Real and Auxiliary is divided into 4K Chunks.

- 4KB pieces of Virtual Storage is called pages.

- 4KB pieces of Real Storage is called frames.

- 4KB pieces of Auxiliary storage is called slots.

Dynamic Address Translation - OS converting virtual addresses into real addresses. When a program is running, the processor must be able to locate its virtual pages in real storage.

Batch Processing

- Batch processing involves jobs running without user interaction.

- In JCL, you say where is the program load located, wheres the input to the application program and where is the output is going to go.

- When a JCL is submitted, it is received in the JES(Job Entry System), queues job waiting to be executed, manages priority, schedules processing and controls output processing.

- An initiator system program, processes the JCL, creates an environment in an initiator address space and run the job in that address space.

IPL

- Initial Program Load a.k.a booting the z/OS system.

- Special console is used which is connected to network of mainframe boxes or Harware Management Console(HMC) via Browser where we load Operating System of a particular partition thats going to start the boot of the operating system(z/OS). Load profile knows the device address and its going to actually kickoff the boot of the IPL.

- Operating System is on the special volume called the System Resident Volume(SYSRES or SYSRS1)

- IPL is processed in phases and each phase builds on previous. First phase is just where the OS is located, device number of the SYSRES.

- Hardware IPL

- IPL RIMs (Resource Initialization Modules)

- NIP RIMs

- MSI : Master Scheduler Initialization. In this phase server important address spaces are created like SMF, System Logger, BCPii(Base Control Program internal interface)

- JES

- Then RACF, ICSF, VTAM, TCAS, TCP/IP(with this starting, IPL is considered complete)

- After this lot of middleware(Db2, CICS, WAS, IMS..) and application needs to be started

2AH - Squeeze Your Data with Db2A History of compression

Speaker: Jim Dee

Source : Slides

Tablespace Compression

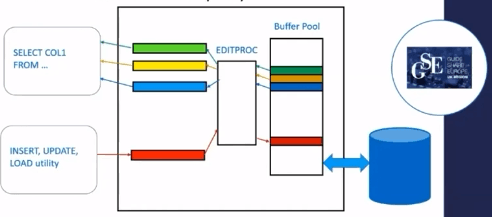

In the beginning, DBAs were using EDITPROC utility to save disk space which is a exit routine.

- Idea is to compress each row before you put them in the bufferpool and expand when it comes out of the bufferpool.

In Db2 V3, IBM came with compression on engine itself and they exploited a hardware instruction(CMPSC), its an expensive instruction but its very cheaper when compare to writing compression softwares.

CMPSC depends on a dictionary which depends on strings found in the table, it implements Lempel-Ziv algorithm. Dictionary is created during load or reorg utility. Copy of the dictionary is stored in disk as part of table.

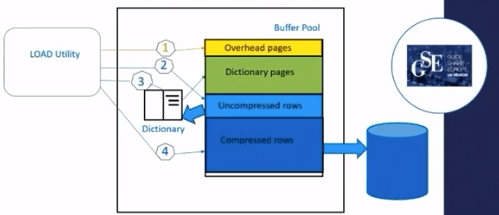

Load utilty compressing pages

Steps are,

Overhead pages : Load utility write images of each page of the table to the end of bufferpool. They are very important, but has nothing to do with compression.

Uncompressed rows : Load writes uncompressed rows to first data pages of the table as its doing that its building the dictionary in memory.

Dictionary pages : When enough dictionary is built for compression to use. Dictionary pages are added before the data pages in the structure

Compressed rows : Load utility uses the dictionary to compress the rows.

Above diagram could represent a partition or partition of PBR but each partition of a PBG has its own dictionary but it should look similar.

Use DSN1COMP to analyze compression

- Recommended to run against a imagecopy

Db2 uses row level hardware compression. Each row is decompressed before passed from bufferpool. All SQL sees is decompressed data.

Copies and logs are compressed

Application performing random access retreiving less rows are effective than sequential access as they have to decompress less number of rows.

In Db2 10, IBM added Auto Compression. Can now build dictionary without Load or Reorg.

In Db2 12, FL504, IBM added Huffman compression

In FL509, IBM added Huffman compression at object level( can mention in DDL -

COMPRESS HUFFMAN)- With HUFFMAN, CPU usage might be higher but disk savings should be greater.

Index Compression

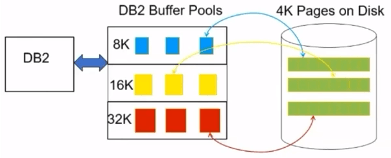

Compressed on disk and other times you are seeing the expanded page in bufferpool.

Only leaf pages are compressed

Compression is done by software.

4K on disk but 8/16/32K in bufferpool.

If you are going read one key from a page, entire page must be decompressed.

Db2 uses a special 4k buffer which is page-fixed in Db2 memory and its not a bufferpools.

- An Index Page from BP is compressed into a 4K image(special buffer) and written to disk(usually multiple pages are written)

- When reading, 4k page is read from disk into special buffer and then expanded into bufferpool.

Here sequential access performs better than random access. Complete opposite to tablespace compression.

In Db2 12, FL 500, IBM added online ALTER INDEX COMPRESS YES. Index doesn't go offline(rebuild pending). Now its in advisory and index will be available.

LOB Compression

- Available from Db2 12 M500

- Compression/Expansion at the time of I/O. Only compressed in Disk.

- LOB Compression exploits cache processor z/EDC

- There is a no separate dictionary pages, the dictionary is stored in compression string in the LOB itself.

5AK - zOS Container Extensions Part 1 : An Introduction to Running Containers Inside zOS

Speaker: Steve Warren

Source : Part 1 Slide, Part 2 Slide

Expanding z/OS Software Ecosystem

Traditionally z/OS was all about running OLTP applications using middleware products such as CICS, Db2, MQ and they all have high availability, high throughput transactions on the system. Common languages found in z/OS Assembler, COBOL, PL1 and Batch programs which were run in middleware infrastructures.

Around 25yrs back, Unix System Services were introduced(TSO OMVS) and z/OS is a certified Unix Operating System to this day. With this it was easy to port unix applications to z/OS and recompile and run it on z/Os Unix and this led to running C++, Java and WebSphere application in z/OS Unix. Now, we were able to run z/OSMF, Spark and Zowe applications on z/OS Unix.

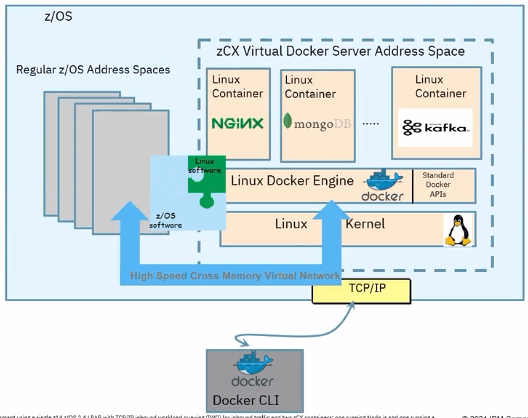

Next evolution in z/OS Unix is z/OS Container Extensions(zCX). With this, we can take Linux on Z Binaries and deploy them directly in z/OS as docker containers. So now, we can run mongoDB, Kafka, NGINX, Spark, Maven and Redis in z. Requires z14 or later.

Applications which are running in linux and interacting with z/Os anyway those are the workloads which could be run in zCX. What those applications get out of the box is, improved network transaction rates by 81% and reduced latency by 45%.

IBM provides a turnkey solution,

- IBM has pre-packaged number of items inside the zCX server that enable you to get up and running right away. No need to procure linux kernal or docker engine.

- Provisioned using z/OSMF workflows

- IBM provides regular service to these appliances.

- zCX workloads are zIIP eligible

Usecases of zCX

- Microservice - logstash, Etcd, Wordpress, etc.

- No-SQL - MongoDB, IBM Cloudant, etc.

- Analytics frameworks - z/OS Spark, TensorFlow

- Messaging Frameworks - IBM MQ, Kafka

- App Connect Enterprise

- Web server proxies

- Emerging programing languages

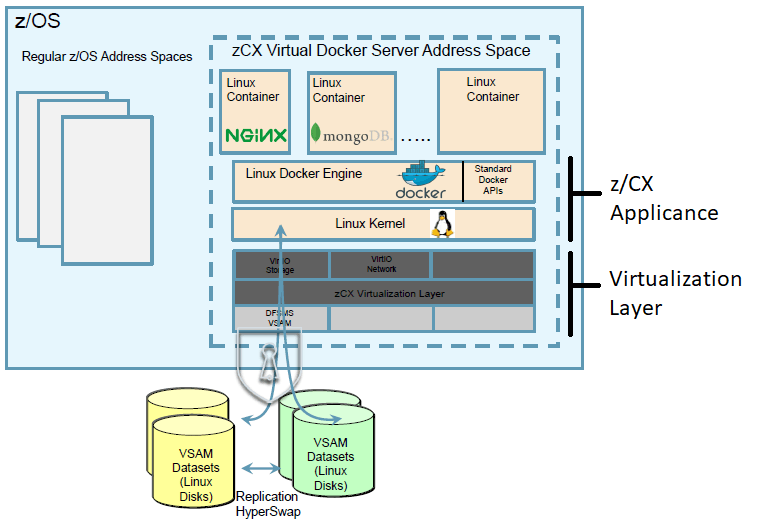

IBM zCX - z/OS Storage Integration

- IBM did not modify Linux Kernel to leverage z/OS technologies

- What IBM did is ? zCX developers used a published interface that interacts with Storage and Networking called VirtIO devices. They are like device drivers.

- Suppose from zCX you write to data, its going go to VirtIO Storage to zCX Virtualization Layer then to DFSMS VSAM to update.

Virtualization Layer is not provisioned as part of zCX address space but its a part of z/OS Base in BCP Base. So you can get upgrades to this part alone. This manages the storage, network and console access.

zCX Applicance contains the Linux Kernel, Docker Engine and zOSMF Workflows. zCX Applicance code is deployed into a separate Address Space, Virtualization Layer code is not part of this zCX address space.

zCX - CPU, Memory, Storage and Workload Management

- Each zCX server is provisioned as a Address space and each address space has specific assigned storage, network and memory resources.

- Those server needs their own memory(fixed) above 2GB bar. When you page-fix memory that basically takes that memory off the table for other applications to use. That memory is not shared with other applications.

- Shares CPU resources with other address spaces but can influence resource access via configuration and WLM policies.

- zCX requires you to specify how many virtual CPUs it can use. Each virtual CPU is a dispatchable thread(ie., These CPUs translate into MVS TCB.). zCX utilizes zIIP CPU via MVS dispatcher. The dispatcher will dispatch these tasks on the available zIIPs or general purpose CPUs based on WLM priorities.

- All memory is backed by pages(4k, 1M and 2G) in virtual storage. Bigger the page, the less z/OS overhead(2G fixed pages are for best performance).

7AM - APPLCOMPAT, NULLID and Me

Speaker: Rob Gould and Gareth Copplestone-Jones

Managing APPLCOMPAT

Introduced in Db2 11 - Objective was to avoid the need to modify application programs when migrating a Db2 system to a new release. This was a way to ignore pre-reqs before migrating to new version.

In static SQLs, in the BIND PACKAGE command you can mention.

- APPLCOMPAT(V12R1M505)

- APPLCOMPAT(V12R1M100)

- APPLCOMPAT(V12R1M503)

- If not specified, it will use APPLCOMPAT zParm value.

In Dynamic DBA and Developer Packages, you can mention special register

SET CURRENT APPLICATION COMPATIBILITY='V12RM503'- In Db2 12, CURRENT APPLICATION COMPATIBILITY must be <= the BIND APPLCOMPATIBILITY setting.

In Generic Dynamic SQL Packags e.g., NULLID. Which packages does it pick, thats where it get quite messy.

Note : IFCIDs to capture and report application incompatibilities(366,376)

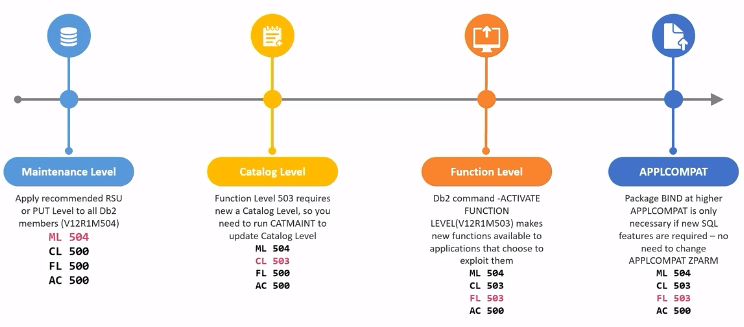

Continous Delivery

Four levels are associated with continous delivery

Maintenance level - lifted by applying maintenance which contains defect fixes and enchancements, also known as (Db2) code level.

Catalog level - vehicle to enable new Function Level, needed for some Function Levels

Function level - needs to be activated, introduces new Db2 for z/OS features and functionality, with no impact on existing application behaviour.

APPLCOMPAT - set at the package level, providing an "island of stability" for a given application

- Determines SQL function level of application

- APPLCOMPAT attribute must be <= Function Level

- APPLCOMPAT must be advanced via BIND/REBIND to exploit new SQL function

Exploiting New SQL Function

When to BIND/REBIND with a higher APPLCOMPAT ?

- Once you eliminate all the application incompatibilities for a given package.

- You plan to exploit new functionality

- When IBM has withdrawn support of the APPLCOMPAT level.

APPLCOMPAT and NULLID packages

- NULLID : They are shared, generic packages used by client applications(JDBC, ODBC, CLI, .Net) accessing Db2 via DRDA over TCP/IP. Package names are,

- SYSSHxyy : S indicates small package like small cursor size and H indicates CURSOR WITH HOLD. x indicates isolation levels like 1(UR), 2(CS), 3(RS) and 4(RR). yy is just sequential numbers between 00 - FF.

- SYSSNxyy : Here N indicates you are using NOT WITH HOLD

- SYSLHxyy : Here L indicates Large package

- SYSLNxyy :

Multiple collections for NULLID packages are already used by some sites for managing KEEPDYNAMIC attribute and for High Performance DBATS

- Use meaningful names for collections like NULLID_V12R1M504

- Usage: BIND PACKAGE(NULLID_V12R1M504) COPY(NULLID.SYSLN201) ACTION(ADD) APPLCOMPAT(V12R1M504)

- Specify collection/package set via CURRENT PACKAGESET or CURRENT PACKAGE PATH special registers. Do remmeber CURRENT PACKAGE PATH takes precedence if both specified.

Client-side configuration

- Specify CURRENT PACKAGE PATH / CURRENT PACKAGE SET via:

currentPackageSet/currentPackagePathJDBC propertiesCurrentPackageSet/CurrentPackagePathCLI/ODBC properties

- Can also be specified in connection string

- Cons being may application to configure

- Specify CURRENT PACKAGE PATH / CURRENT PACKAGE SET via:

Server-side configuration

- Functionality of CURRENT PACKAGE PATH at server side is achived by using System Profile Monitoring. We have two profile tables(not a catalog table).

- SYSIBM.DSN_PROFILE_TABLE : defines filtering scopes, who or what is being monitored.

- SYSIBM.DSN_PROFILE_ATTRIBUTES : defines the actions applied to whats being monitored.

- This be started and stopped by

-START PROFILEand-STOP PROFILEcommands.

- Functionality of CURRENT PACKAGE PATH at server side is achived by using System Profile Monitoring. We have two profile tables(not a catalog table).

2AO - How does Db2 z transform your SQL query for better performance

Speaker: Huong Tran

Source : Slides

2AP - Db2 12 for zos and Asynchronous Systems Managed CF Structure Duplexing Update

Speaker: Mark Rader

Source : Slides

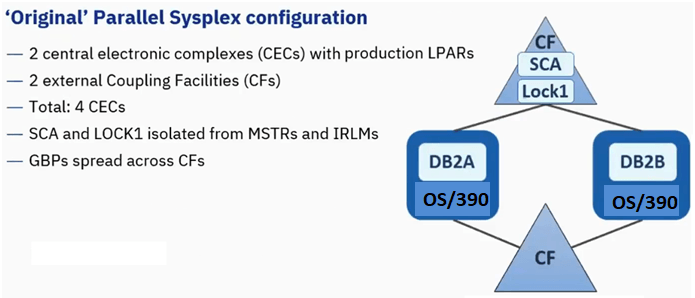

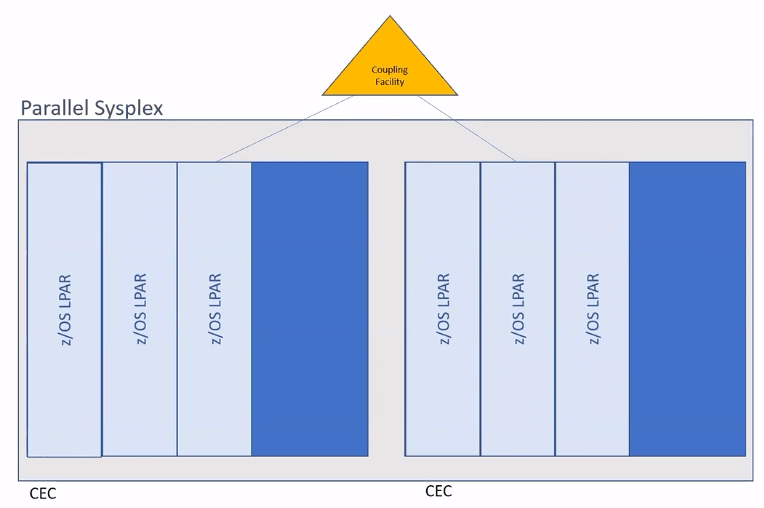

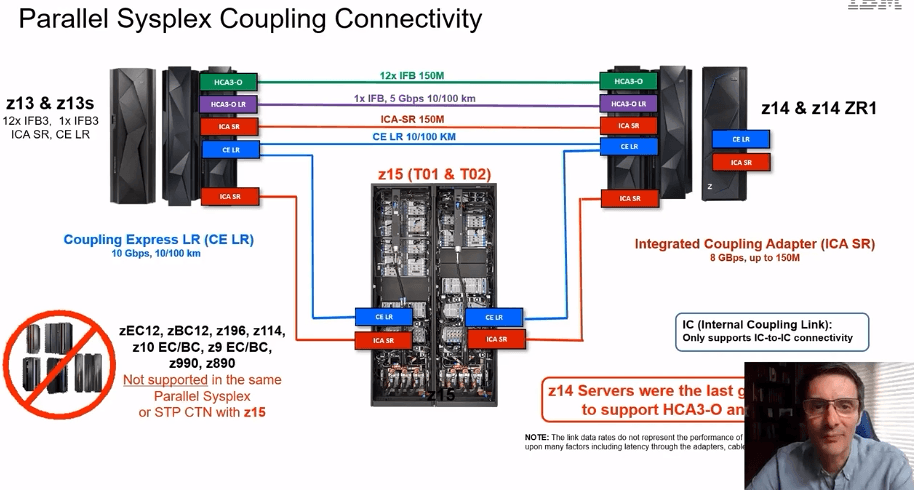

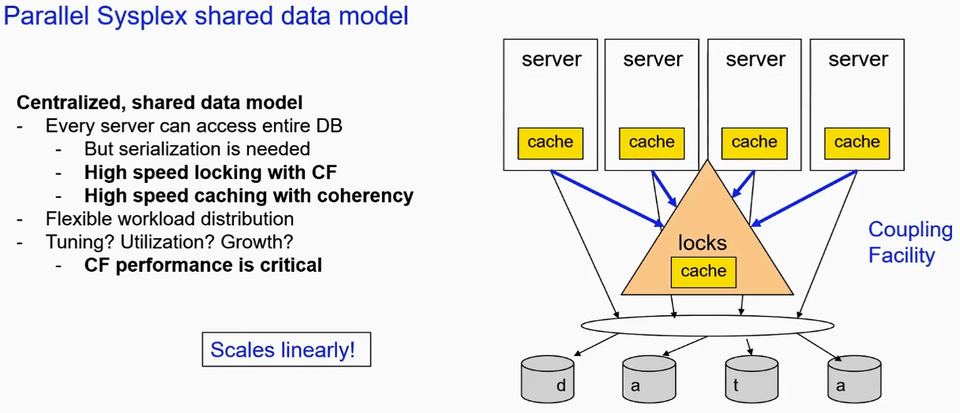

Parallel Sysplex basics for Db2 z/OS

- In Data Sharing systems, Db2 subsystems are called as Members

- Coupling Facility(CF) structures are used for high-speed sharing of locks, status information and data(tables and indexes)

ssnmIRLMallocates lock structure: dsngrpnm_LOCK1 (LOCK1)ssnmMSTRallocates shared communication area: dsngrpnm_SCA(SCA)ssnmDBM1allocates group buffer pool(GBP) for each local BP with shared data- dsngrpnm_GBPn(GBP0, GBP1,... GBP8K0,... GBP16K0,... GBP32K,...)

Below structures are required by Db2,

- LOCK1 and SCA, if Db2 cannot allocate them, Db2 will fail.

- All IRLMs required to rebuild LOCK1

- All MSTRs required to rebuild SCA

Original Parallel Sysplex Configuration

This was designed in mid-90s.

GBPs is spread across two coupling facility. Idea is some large ones in one coupling facility and smallers ones are in another. Its done to balance memory and CPU

Failure to any single CEC(of total 4) tolerated

- If we loose CEC having CF that has SCA & LOCK1, 2 Db2s(IRLM and MSTR) will be used to rebuild structures in other CF by connectors

- If we loose OS/390 or Db2 member, then we have to restart Db2 member to release retained locks.

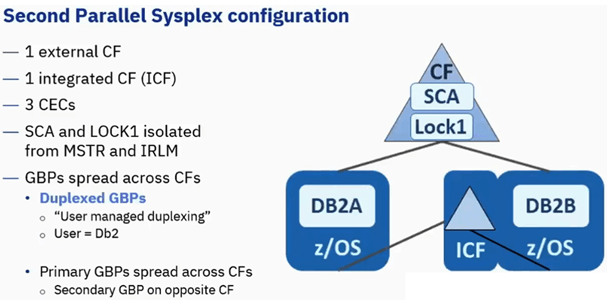

Second Parallel Sysplex Configuration

In this IBM introduced Integrated CF(ICF). Here LPAR having one of the CF is put on a system where there is already a z/OS and Db2.

Around the same time Duplexed GBPs were introduced as well. Here Db2 is writing to XES API to manage how the GBPs allocated and where primary is based on overall load.

Here as long as we have SCA and LOCK1 on external CF system, we can tolerate failure on any single system.

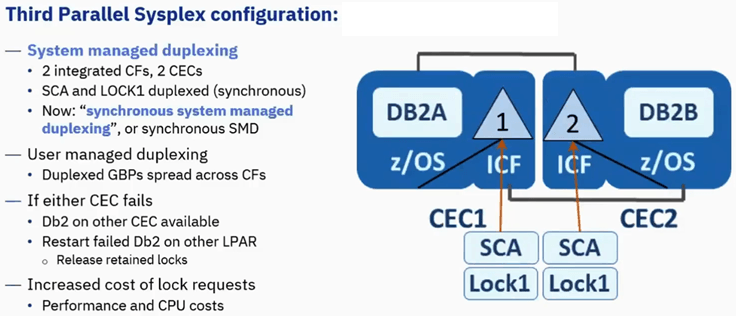

Third Parallel Sysplex Configuration

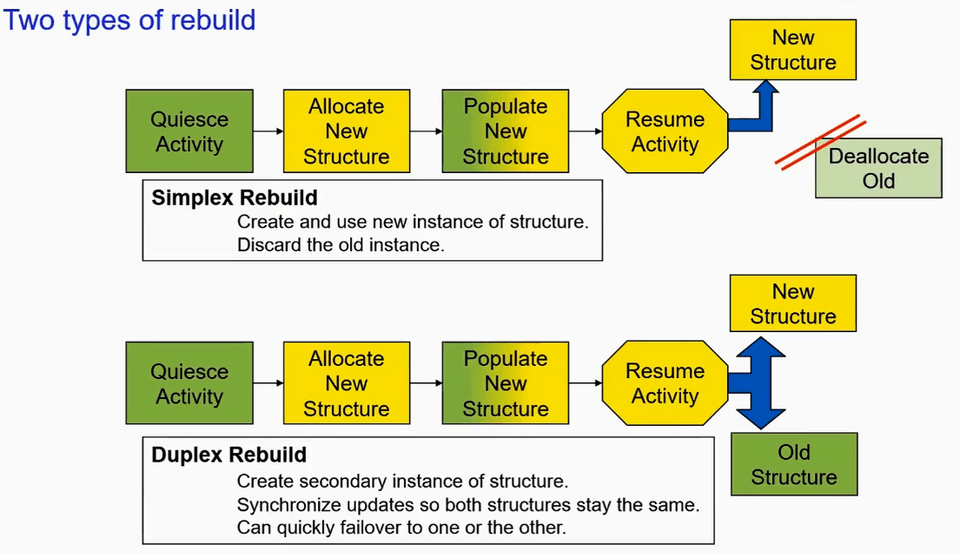

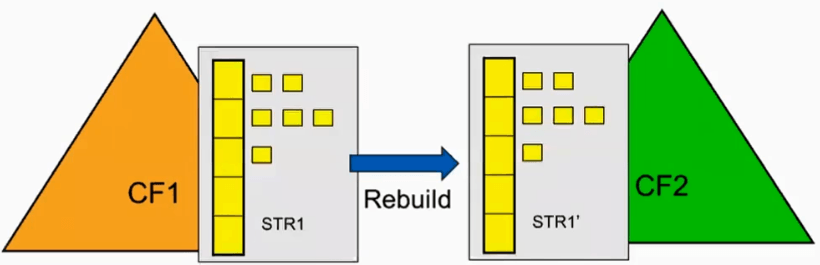

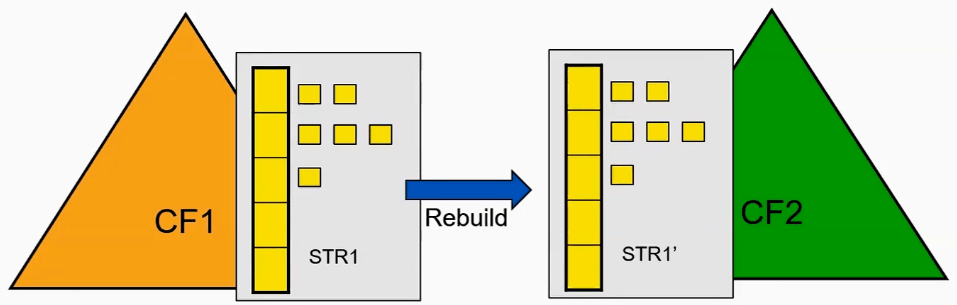

In System Managed Duplexing, CF Structure rebuild is a process by which sysplex allocates new instance of CF structure, populates the structure with data, and proceeds to use teh new structure instance.

Simplex rebuild - Discards the old instance, uses the new.

Duplex rebuild - Uses both instances, sychronnizing updates so the structure contents remain the same.

There are two ways to rebuild,

User managed - Tailored to application, involves significant programming effort, atleast one of the Db2 must be connected to structure to rebuild it.

System managed - General purpose, no development cost as system does the work, it can rebuild the structure from one CF to another CF even if there are no connectors.

System managed is the best

Percentage of requests that get duplexed

- Cache - 20% is typical [not Db2 GBPs]

- List - 100% - Db2 SCA

- Lock - 100% - Db2 Lock1

Duplexed request increases cost of z/OS CPU(3x to 4x, CF CPU(4x to 5x) and CF Link(6x to 8x).

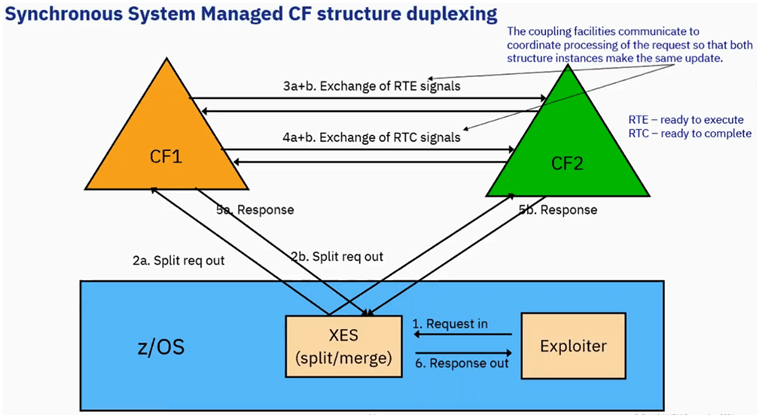

Synchronous System Managed CF structure duplexing

Here Exploiter is IRLM,

- IRLM makes a request

- XES takes and splits the request and send to two CF structures.

- Both CFs exchange Ready To Execute(RTE) signals.

- Then both CFs exchange Ready to Complete(RTC) signals

The coupling facilities communicate to coordinate processing of the request so that both structure instances make the same update. Its Duplexed.

After both agree(3,4), the response comes to XES. Now XES knows that request gone in boths the CFs and in sync in both the places.

XES responds back to IRLM(Exploiter) and its passes to application.

Note: Synchronous cannot continue processing until both instances of the lock structure are updated.

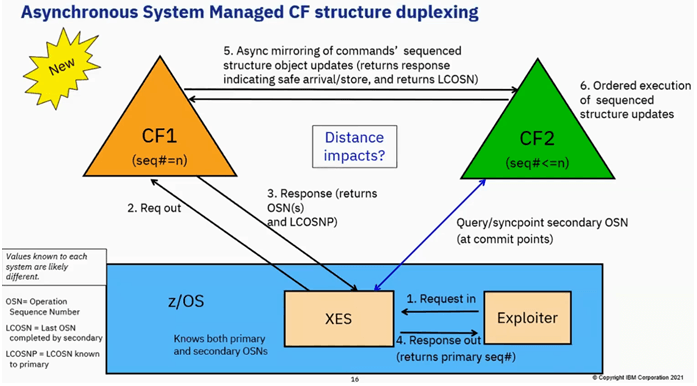

Asynchronous System Managed CF structure duplexing

This is from Db2 12,

IRLM makes the request.

XES takes that request and sends it to the primary structure

Primary Structure(CF1) add a sequence number(OSN and LCOSNP) on the request and responds back.

XES keeps track of primary and secondary OSNs. Its responds back to IRLM with Primary sequence number.

- (ASYNC) : Sends commands sequenced structure object updates to secondary CF2 and it responds back indicating safe arrival/store and returns LCOSN

- Secondary will execute the commands in the same order it was executed in the primary.

IRLM responds back to application

Every often XES queries secondary to know the status by checking Secondary OSN.

Note: Db2 will not write teh log output buffer until the corresponding lock information is updated in the secondary structure.

In a parallel sysplex data sharing environment there would be multiple z/OS systems even though each one finding out from Primary whats going on. The values of operation sequence number(LOSN and LCOSNP) will be different across z/OS systems. It keeps track by a Secondary Update Recovery Table(SURT). Failover for async duplex might be a little slower than sync duplex(both CF are identical).

Note: program can continue processing while the secondary instance of the lock structure is updated.

2AR - ISPF Master Class - Edit from A to ZLAST

Speaker: Dan Dirkse

Source : Slides

6AT - zOS Terminology

Speaker: Keith Sisson

System Z - Z stands for Zero down time. It can be refered to as

- CEC - Central Electronics Complex. This is the New fashioned term

- CPC - Central Processor Complex

MIPS - Millions of Instructions Per Second, its the measure of the capcity of the machine. Higher the MIPS, more it will cost to operate the machine.

MSU - Millions of Service Units, its the measure of the theoretical capacity of the machine to operate.

Processors are configured to operate differently.

- General Purpose Processor(GP) : All instructions for the machine are executed.

- zIIP : This mostly runs JAVA Workload. It does not count towards MSUs towards the machine. You do not pay for the code that is executed in zIIP engine. Its to encourage to use JAVA in mainframe and reduce cost.

- Internal Coupling Facility(ICF) :

- IFL : This engine is designed to run zLinux workloads. Similar to zIIP.

Channels/Cards

- Open System Adapter(OSA) : Its a Network card for the mainframe, prior to TCPIP being widely available, mainframes had their propritery own networking protocol(SNA). Modern MF is connected via TCP/IP just like anyother devices.

- Other cards are Crypto and Ficon

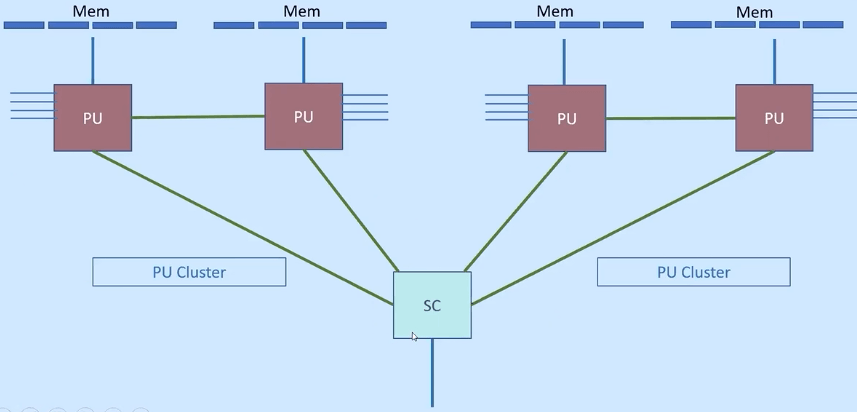

Processors

There are two types of processors that are part of mainframe,

- Processor Unit(PU) Chip : All GP, zIPP, IFL and ICF all types of engines on PU chip.

- System Controller(SC) Chip : PU chips are controlled by SC chips in the drawer. SC chip co-ordinates activities between the PU chips and it also controls communication with other processor drawers in the path.

Newest Chip from IBM is Telum Chip. Its a 7nm chip, it refers to the size of the transistor on the chip. There's 22.5 Billion transistors on the chip, 8 cores, 5Ghz+ Clocks. Thickness of human hair is 80,000nm-100,000nm

Direct Access Storage Device(DASD)

- Each storage device has a six character volser(volume serial)

Virtual Tape

- Originally a tape was used to store data where batch job would be submitted to copy data from disk to tapes.

- Now, we are using virtual tapes which are actually disks behind the box. Luminex Virtual Tape Controller emulates a tape drive and write to disk.

All the tapes and DASD are connected via FICON cables

- Supports hundereds of concurrent 16Gbps fiber optic IO Connections

- I/O link called 'IBM zHyperLink Express' that is designed to support up to 32 ports with link data rate of up to 8 GigaBytes per second.

SysPlex

- Coupling facility is another standalone processor, it could be interal(ICF) or exteral as in below pic.

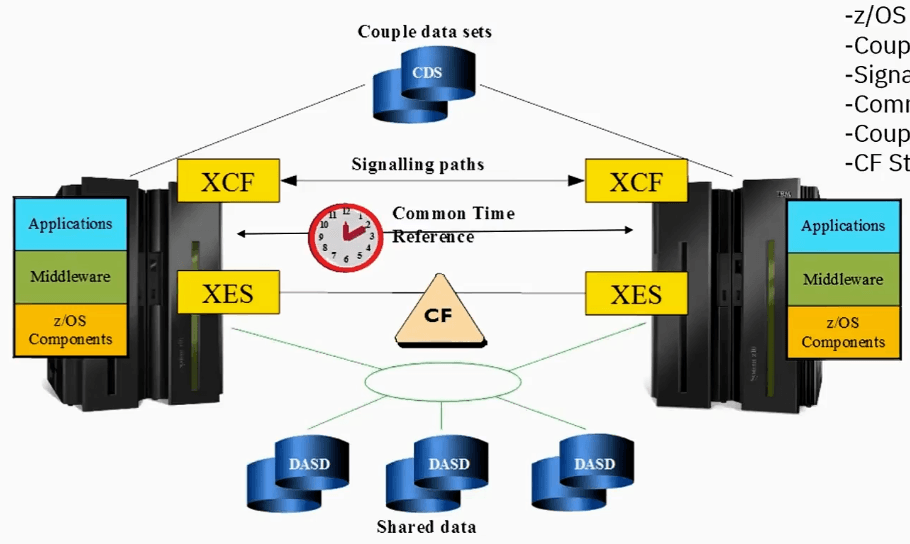

- Connecting two CEC with coupling facility makes this a Parallel Sysplex

- Coupling facility can be used to group two lpars in one machine or separate physical machines. Sysplex is just grouping of logical partitions.

PR/SM is a hardware hypervisor inside of the mainframe. This is a firmware code that runs on mainframe allows logically partition the device. It can be thought of VMWare.

Address spaces

Address spaces are inside an z/OS LPAR. There are different types of address spaces like,

- Address spaces used by system are called System Tasks(MASTER, CATALOG, CONSOLE, ALLOCAS)

- There are address spaces started and controlled by programmers called Started Tasks like VLF, LLA, JES2, TCP/IP, CICS

- Each person logs on to mainframe get their own address spaces.

- Batch jobs

All address spaces are started identical, created by OS and programs are loaded into them and executed.

An address space can be think of as a giant excel spreadsheet and its 16Exabytes thats 1Million terabyte.

When its first created, it looks like an empty spreadsheet then OS loads few things for you below 16MB LINE as thats what original MVS can talk to as it had 24-bit address.

CICS is a subsystem runs in a address space but refered to as a CICS Region. Its sort of a web server.

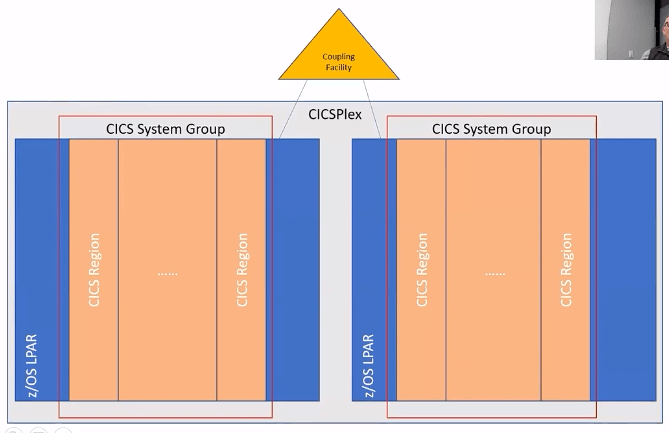

Here two lpars are connected via Couple Facility and bunch of CICS Regions running on different lpars for redundancy. CICS Regions on same lpar are grouped and called as CICS System Group and entirely called as CICS Plex.

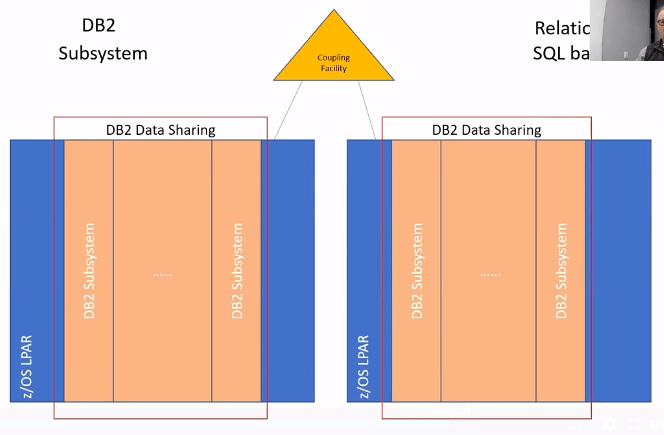

Db2 Datasharing similar to CICS

- APAR is used to describe a problem

- PTF provides the resolution

4AU - Db2 zOS housekeeping best practices

Speaker: Michal Bialecki

Why Housekeeping is important ?

- With SELECT, searched UPDATE - data in order is processed more efficiently ( less GETPAGES, less SYNC IO ) than disordered data, with indirect references to overflow records or pseudo deleted rows/mass deleted pages

- With INSERT/UPDATE, search algorithm for empty row slots find empty spots quicker

- Mitigate concurrency issues - for hot space map pages, deadlocks/timeouts

- Saves DASD Space ( after REORG )

- Getting rid of empty spaces, pseudo deleted and overflow (indirect) records, releases space in tables/indexes and VSAM EXTENTS

With any DML(IUD) processing disorganizes data in tables,

- UPDATE data in place with longer value, that does not fit into original record in data page(overflows)

- DELETE leaves pseudo deleted rows(indexes)

- MASS DELETES - pseudo deleted pages / spacemap

- INSERTS out of order due to locked pages

- INSERTS to end of table partition (eg with MEMBER CLUSTER)

- INSERTS into ALTERed datatypes columns(eg CHAR -> VARCHAR)

Overview - REORG / RUNSTATS / REBIND

REORG TABLESPACE reorders tablespace/partition according to clustering, reclaims fragmented space, materializers pending definition changes

REORG INDEX reorganizes an index space to reclaim fragmented space

RUNSTATS gathers metadata info and info about data characteristics/frequency/cardinality/distribution in tablespace, indexes and partitions. Statistics info quality may degrade as application performs IUD which affects AP choices for

- For dynamic statements during PREPARE / RE-PREPARE

- For static packages being under process of BIND/REBIND/AUTOBIND

BIND/REBIND prepares and preserves access path runtime and stores in Db2 catalog/directory, from where it can be loaded into Db2 and (re)executed.

How we may know what statistics to collect duirng RUNSTATS

With STATSINT zPARM interval in-memory objects statistics are externalized to catalog tables

- SYSIBM.SYSTABLESPACESTATS

- SYSIBM.SYSINDEXSPACESTATS

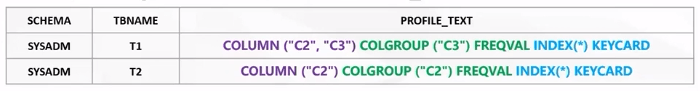

With every BIND/REBIND/PREPARE there is a SYSIBM.SYSSTATFEEDBACK catalog table updated to indicate missing/conflicting statistics.

SYSSTATFEEDBACK table has a column REASON with following values and their respective description and statements for RUNSTATS in Db2 12 are generated in SYSIBM.SYSTABLES_PROFILES.PROFILE_TEXT

| Value | Description |

|---|---|

| BASIC | Basic statistic is missing |

| KEYCARD | KEYCARD statistic is missing |

| LOWCARD | Column has low cardinality which indicates data skew is likely |

| NULLABLE | Distribution statistics not available for a nullable column |

| IXPROBE | Index probing was used to improve very low filter factor |

| DEFAULT | Predicate references a value that is probably a default value |

| RANGEPRD | Histogram statistics not available for a range predicate |

| PARALLEL | Parallism could be improved by uniform partitioning of key ranges |

| CONFLICT | Another statistic conflicts with this statistic |

| COMPFFIX | Multi-column cardinality statistics are needed for an index composed filter factor |

Does this solve all the AP problems, NO. Think of this as a suggestion.

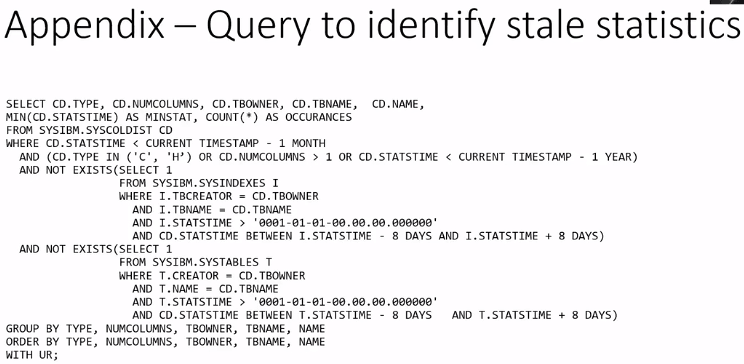

Use DSNACCOX formulas to get candidate objects for REORG/RUNSTATS

- It also includes detection of RESTRICTED TS/IX states to be put in REORG object list of REORG.

When data is deleted, in the index its pseudo deleted but data still exists there, so for any other queries, that data will be read and based on the flag it will know its invalid and it will skip it. Point is, there is an IO for this.

Index page splits, when leaf page is full, Db2 will create a new page and move some(50%) of the entries in the old page to new page, this is to balance index tree. This technique doesn't work well with sequential inserts as half of the space in old index page is not used.

Pseudo-deleted index entries

- zParm

INDEX_CLEANUP_THREADS=10means there will be 10 system tasks to do clean up automatically, it cleans up pages that are touched by GETPAGE processing and will be limited to quite periods. This reduces the need for REORG INDEX. IFCID 377 tracks cleanup at the index page level.

RUNSTATS is not recommended outside of REORG, use it only with conjuction with REORG table

Review the list of object candidates

Using DSNACCOX formulas build a scoring table

- Evaluate each query predicate, how many rows it generates and choose top ones

- Any deferred tablespace and index REORG needs to be prioritized for the next window(or consider increasing window duration)

Perform trend analysis on triggers for REORG and investigate object tuning

- Increase PCTFREE to reduce index page splits ?

- Define some PCTFREE FOR UPDATE ?

- Can use AUTO(-1) which used RTS to adjust to optimum, but bear in mind that it will take several REORG cycles to learn into it

- Increase SEGSIZE to provide better chance of maintaining clustering ?

- Consider larger index page size to reduce index page splits ?

- You may enchance the automation logic to escalate to a tablespace REORG if a significance number of partitions become a candidate for REORG.

RUN ONLY - REORG IX without STATISTICS. Otherwise it may create time drift between AP stats for table and index

- Do not run RUNSTATS without REORG TABLESPACE

- Do not run RUNSTATS on index, without RUNSTATS on table or on some partition only.

REORG Recommendations

- Avoid transaction timeouts during REORG

- (MAXRO + DRAIN_WAIT) < (IRLMRWT - 5 seconds)

- For large objects, or when troubles to fit into maintenance windows:

- Run REORG with MAXRO DEFER, schedule the REORG outside the REORG window, and ALTER UTILITY REORG MAXRO n inside REORG window

- Can run REORG with FORCE ALL option to try and force the REORG through, but at the risk of transaction failures.

- Use DRAIN ALL - don't use DRAIN WRITERS - you would need to DRAIN twice(once for writers and once for readers), WRITERS and ALL(one time itself it will drain WRITERS and READERS)

- Consider using option DRAIN_ALLPARTS YES

- Helps avoid undetected deadlocks between CLAIMS and DRAINS when running REORG at the PART level when one or more NPIs on table

- zPARM REORG_DROP_PBG_PARTS shall be set to DISABLE, otherwise, if partition is dropped you cant recover to PIT prior to REORG

RUNSTATS Recommendations

Don't use FREQVAL by default

- Do not specify it in your RUNSTATS job unless it was recommended by SYSSTATFEEDBACK or Data Studio

- If you exclude FREQVAL - we will by default collect on the leading column of each index

RUNSTATS INDEX ... FREQVAL NUMCOLS n - n>1 are often not useful

- This statistics only cover frequent values of combination of ALL together columns listed.

- Use ONLY for queries with EQUAL predicates with LITERALS

- Dont use FREQVAL (BOTH or LEAST) use only MOST its valuable to the optimizer to know the highly skewed values.

Don't use HISTOGRAMS by default, they can quickly become stale for ascending columns(especially dates/timestamps)

From V12, INVALIDATECACHE NO is the default -- Running RUNSTATS will not purge Cached Dynamic Statements(DSC). If you want to invalidate DSC use

- INVALIDATECACHE YES

- REPORT NO UPDATE NONE

- RESET ACCESS PATH

Runstats may not be necessary for VOLATILE tables as when Db2 executes queries against volatile tables, Db2 chooses index access whenever possible.

On REBINDs

Db2 provides many features to reduce the introduction of new access paths and providing stability and reliability in performance

- APREUSE - To gain the benefit of new runtime structures - with reduced risk of regression

- APCOMPARE - compare access path wehn you rebind, and either issue WARNing(WARN option) or terminate(ERROR option) if differences detected.

- REBIND EXPLAIN(ONLY) ... or EXPLAIN PACKAGE -- check current access path

- SWITCH - To revert to prior "good" performance. You should have original

New REBIND Behaviour from Db2 12 FL505

- To allow BIND/REBIND to complete without requiring quiesce(exclusive access) of package. Will create a new CURRENT(phase-in) and existing will be the "phase-out" copy

6AU - Mainframe Performance Tuning

Speaker: Mary Solomon

What is Performance Tuning ?

Most systems will respond to increased load with some degree of decreasing performance. A systems ability to accept higher load is called scalability and modifying a system to handle a higher load is synonmous to performance tuning.

For effective performance management, you need to go on to measure resource requiremnet at the workload level. Analyzing your workload helps you to understand the behaviour of your system and how workloads interact with each other.

Understand your system

- Which workloads are placing the greatest burden on the system resources ?

- Measure your online and batch CPU usage pattern

- How do performance baselines compare before and after optimization efforts ?

- Measure CPU usage at System level, LPAR level and workload level

- SMF 70, 72 and 30

WLM Workload distribution

- Dont put all workloads in single bucket

- Each service class is comprised of one or more service class periods. Its at the period level that work is assigned a goal, an importance level and optionally, a period duration of service.

- Distribute the workload

- Try to identify major contributor, review your WLM definition, divide your workload based on your business SLA. If some of batch workloads can run on evening, move it to evening.

Upgrade Compiler

- Using COBOL 6.3 reduces, on average, CPU usage 58% over applications complied with COBOL v4.2 on z15

Review program logic

Capping

Reason you would consider capping techniques,

- Technical Motivation

- Protect/Isolate LPARs against other LPARs

- Protect workloads(set of service classes) against other workloads

- Finanical Motivation

- Limit software cost

- Guaranteed capacity limit for one or more LPARs

- 4h rolling average consumption

- Limit software cost

Memory

Central Storage

- Its good to know the allocated central storage size. Used in Hipersorting, dataspace sorting and memory object sorting.

AFQ(Available Frames Queue)

- When the supply of REAL frames becomes low, z/OS uses page stealing to replenish it based on an LRU mechainsm

- Paging

- High paging rate always indicating the memory constraint

- DFSORT bases it use of these very efficient sorting methods on the amount of central storage it can use without causing excessive paging on the system.

Application Performance

CICS

- SMF 30 - Shows CICS region CPU usage data

- SMF 110

- CICS transaction performance data

- Avoid high no. of transaction abend

- Measure QR and L8 CPU usage

- Measure transaction volume

- Looping Transaction

- Monitor CICS transaction CPU usage and transaction volume

- Transaction Response time

Note 1 : CICS transactions programs mainly run on the QR(quasi reentrant TCB). This is the CICS main TCB. Note 2 : Db2 calls within the transactions normally use L8 TCB.

Db2

- SMF 30 - DIST address space using

- SMF 101, 102 - DDF user and transactions info

- IFCID 196 Timeout report

- IFCID 172 Deadlock report

zIIP

- zIIP is initially designed for Java and now Db2(SP) and Cloud workloads(REST)

- z/os Container Extensions - 90% is zIIP eligible

- IBM z/OS Connect EE is a Java based product so its 99% zIIP eligible

- Data Virtualization Manager for z/OS - Allows to R/W data of relational and non-relation data without having to move, replicate or transform data.

- zIIPs can be used to process network encryption and decryption

- XML Processing

- IBM Watson ML uses zIPP

- Provides Capacity for System Recovery Boost

- z/OSMF is 95% zIIP eligible

Question i had mind,

- How to see zIIP Eligible MIPS and zIIP MIPS usage ?

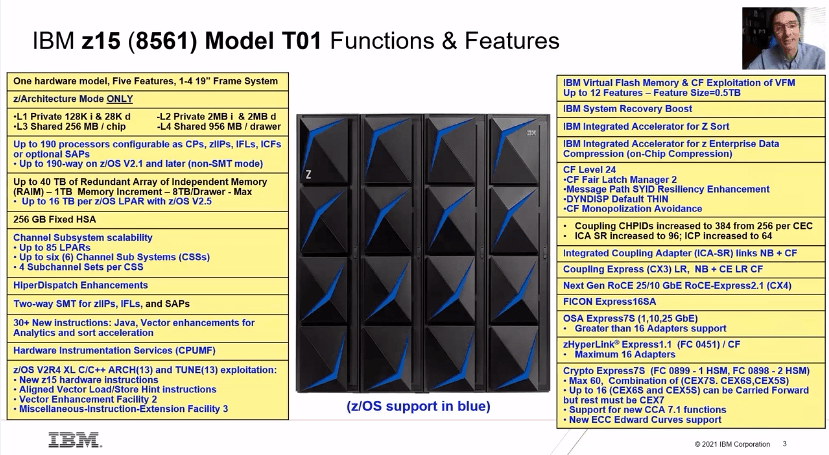

5AV - IBM zOS on z15 Hardware

Speaker: Steve Warren and Marna Walle

Source: Slides

Fix Categories

- Required

- Exploitation

- Recommended

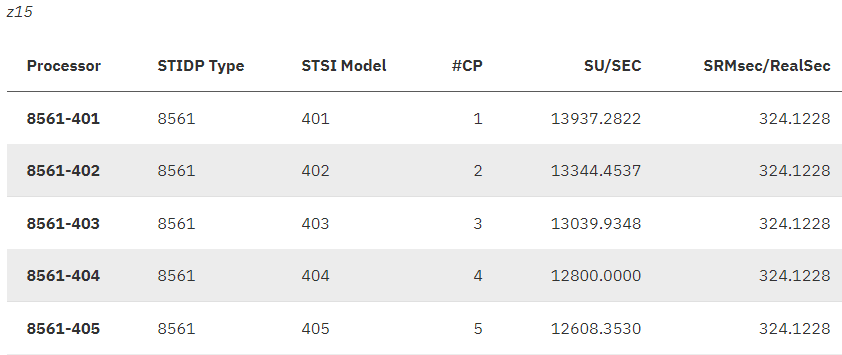

6AV - How long is too long Multi-period Service Classes

Speaker: John Baker

Source: Slides

Measuring Workloads

- In z/OS, System Resources Manager(SRM) tracks each workloads consumption of services.

- SMF 30 and 72 provide insight into service consumption by each category,

- TCB(Task Control Block) aka CPU

- SRB(Service Request Block) also CPU

- I/O derived from EXCP counts

- MSO(Main Storage Occupancy) executing within central storage

SRM Constants

- SRM constants allow service to be normalized across various capacities of IBM processors

- Like the LSPR(Larage Systems Performance Reference) aka "MIPS tables", SRM constants provide a relative comparison of hardware capacity

Google "Processor version codes and SRM constants" to get below table

In the above pic, if you notice, the SU/SEC for 8561-401 and 8561-405 there are some differences thats due to "MP Effect", multi-processing overhead associated with increasing the number of cores in a given machine.

Example a job that consumers 1 million SU in z15 will take around 10 CPU seconds. If run on a 8561-401 that only produces 13937.2822(SU/SEC) will take 70 CPU seconds.

Multi-period Service Classes

- Workloads are grouped into Service Classes

- WLM doesn't manage Service classes, it manages Service Class periods. Each service class has atleast one period and when more than one period is defined, each period is like a separate service class with its own goal.

- Each period has its own goal and importance

- Finally period must specify a duration

- Duration is total weighted service units

- When a workload exceeds the duration of the service class period, the workload 'moves' to the next period. Do understand, goals reduce in successive periods.

- Well behaved transactions(batch or online) with lower CPU consumption, receive preferential treatment

Example

Hardware (z14 3906-710) - Each core is rated at 75,117 SU/SEC

Period 1

- 90% complete in .05 seconds (50 milliseconds)

- Importance 2

- Duration 1000 (SU, Take a look coeffient, if its 10, it means you need to consume 1000/10=100 raw service unit -- Total weighted)

- 1000su / 75117 = 0.01(10ms). We can see hardware can produce 1000 service units in 10ms, our goal is only 50ms. This gives basic assurance hardware can handle meet the goal.

- 75117 * 0.05 = 3756 SU

Period 2

- Velocity 40

- Imporantance 3

- Duration 2000000 (2M SU)

- 2,000,000 / 75117 = 26 seconds

- Transaction can accumulate approximately 26 seconds of CPU time before moving to the next period.

Period 3

- Discretionary

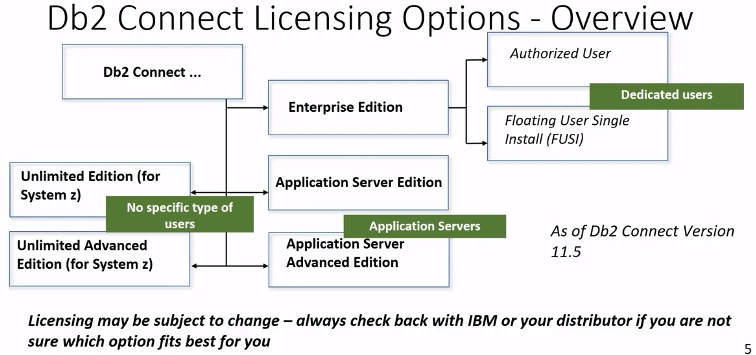

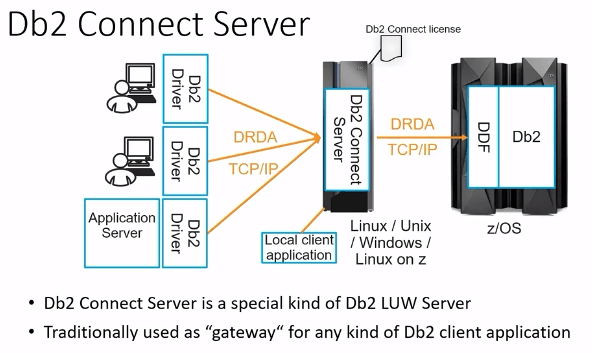

2AY - Db2 Clients, Connect, gateways - what do I really need

Speaker: Christoph Theisen

Source: Slides

What is Db2 Connect

Its a synonym for Db2 Clients or every type of remote connectivity.

IBM is asking to move away from Db2 Connect Server and use direct connectivity.

Db2 Connect License

There are 3 types of license

- Enterprise Edition -- Medium size, used for named users or group of named users.

- Application Server Edition -- Its mainly used in unknown and many number of end users. WebServer is running Db2 Client application connecting to mainframe. Price depends on the capacity of Application Server.

- Unlimited Edition(for System z) -- Most expensive one

License

- Required for Db2 Data Server Clients, Data Server Drivers, Db2 Connect Server to Db2 for z/OS

- Required if connect comes from Db2 LUW to Db2 z/OS

- Not Required for Db2 z/OS to Db2 z/OS

- Not Required for access Db2 z/OS via REST

- IBM Data Studio uses restricted licenses(there is license in the installation path which comes with the product).

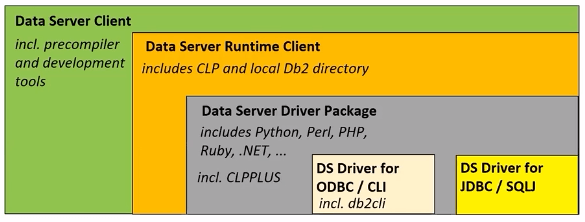

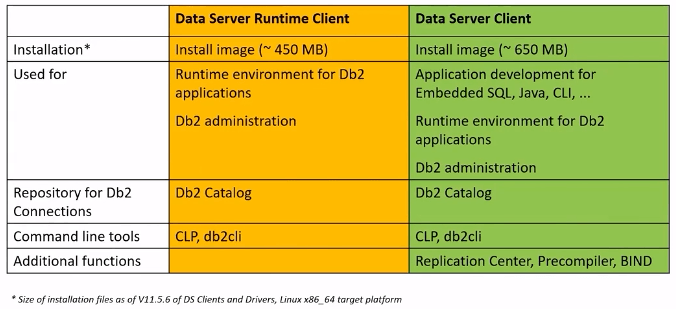

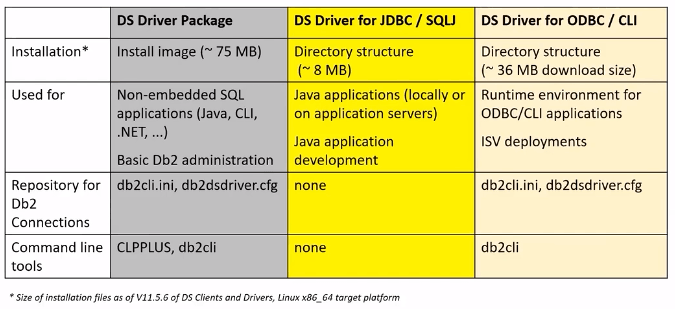

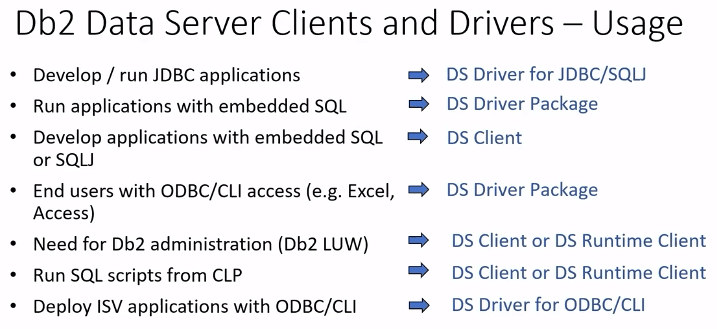

Db2 Data Server Clients and Drivers

- Data Server Client : This is the biggest footprint. This includes Replication Center, Precompiler and BIND.

- DS Driver for ODBC/CLI : This helps you to catalog database connections to remote databases

- DS Driver for JDBC/SQLJ : This is the smallest footprint

Db2 Connect Server aka Gateway

- Point of Control

- Ability to stop server (or) cut connections of any application that is misbehaving

- Reduce number connections

- Used for connection concentration

- Sysplex workload balancing

- Has ability to distribute workload

Is Db2 Connect Server required ? Check the slides from 18-21

2BB - 2021 Edition - Db2 for zOS Development - SWAT Tales

Speaker: Anthony Ciabattoni

Recovery Asset Infrastructure

- Wrapping active log datases too freqently ( < 6hrs )

- Writing archive logs and ICs directly to tape or VTS

- This will result in serialization for multi-object recovery operations

- Even so VTS is backed by DASD but it is still recognized as tape device by the operating system

- Additional storage pools are not pre-allocated to copy VTS Objects to DASD prior to recovery.

- Retention period for recovery assets are not consistent. e.g:archive logs, imagecopies

- LOG NO tablespaces have two imagecopies

...

6BB - zOS program fetch, how programs are found for execution

Speaker: Keith Sisson

Executing a program

- Get the program that you want to run

- Load the program into your address space

- Execute the program

Address space

- Address space is actually a virtual address space.

- In a 64-bit address space, 16Exabytes is actually not there but what z/OS does is in the background, it swaps code in-and-out seamlessly behind the scene. To give you an impression, the entire code is loaded.

Just imagine the bottom has 64 zeros and top has 64 ones.

Fetch Order

- Job Pack Area (JPA)

EXEC PGM=EXCEL. If the copy of the program is already loaded in the address space it will be used otherwise it will search for new copy.

- TASKLIB

- A Program can allocate one or more datasets to a TASKLIB concatenation with an assembler macro by specifying the TASKLIB option. Modules can then be loaded from a DD concatenation

- STEPLIB or JOBLIB

- These are specific DD names that can be used to allocate data sets to be searched ahead of the default system search order for the programs.

- When STEPLIB and JOBLIB both are mentioned in a JCL, STEPLIB takes precedence over JOBLIB and load is searched there.

- LPA (Link Pack Area)

- These are loaded in addres space during IPL time and they are persistent.

- Libraries in the LINKLIST

- If a module is not found in the other above locations, the LINKLIST is then searched. The LINKLIST is a 'list' of load module libraries that reside on disk, that the system is to search.

More on catalogs...

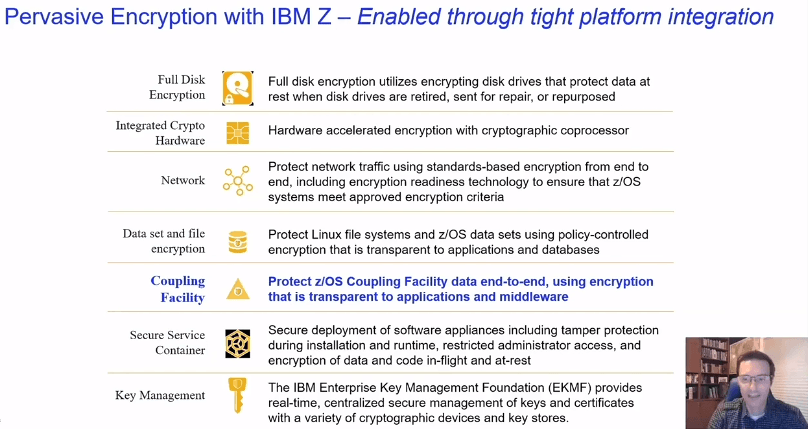

5BC - Protecting data through encryption of Coupling Facility Structures

Speaker: Steve Warren Source: Slides

With z14 and z15, we can encrypt all of the application data without making any application changes and no impact to SLAs

Steps

- Define Policy -- Edit CFRM policy input, specifying ENCRYPT(YES)

- Create Keys -- Run policy utility and store keys in CFRM CDS

- Activate Policy -- Start the new policy by command

SETXCF START - Encrypt Data -- Rebuild structures by

SETXCF START,REALLOCATE

More details on above steps....

7BE - Automating DB2 Schema Change - A Practical Guide

Speaker: Julian Stuhler Source: Slides

Whole presentation was very precise, if i had to take notes, it would be entire presentation. :D

4BI - Db2 for z/OS Continous Delivery - Five Years Later

Speaker: Chris Crone

Saving best for last

Four new levels

Maintenance level(ML)

- Also known as code level

- Contains fixes & inactive new functions

Catalog level(CL)

- Db2 Catalog changes that are needed for FLs

Function level(FL)

- Introduces new Db2 features and functionality

APPLCOMPAT level(AC)

- Determines SQL level of applications

- Activates new SQL syntax

- Freezes SQL syntax even if FL is later moved back to earlier level

Initial assumptions were New MLs & FLs expected every 3-4 months

There is a new catalog table sysibm.syslevelupdates to keep track of FL changes.

Db2 Continous Delivery

APPLCOMPAT(V12R1M501) and above

Packages for IBM Data Server Drivers (aka – the nullid packages)

In Db2 12, APAR PH08482 simplifies the process of activating new function levels without impacting existing JDBC, CLI, and .net applications, and it possibly reduces the coordination effort required between Db2 administrators and application programmers, for activating new application compatibility (APPLCOMPAT) levels. With this APAR applied, Db2 12 assumes that the IBM Data Server Drivers at the following levels or higher fully support all new SQL capabilities:

- IBM Data Server Driver for JDBC and SQLJ Versions 3.72 and 4.22, or later

- IBM Data Server Driver for ODBC and CLI Version 11.1 Modification 2 Fix Pack 1 or later

Specifically, this APAR makes the clientApplcompat property optional for applications that use the IBM Data Server Drivers (different spellings and capitalization are used in various drivers; however, for readability this post uses only the correct spelling for Java drivers.) Previously, Db2 12 required such applications to specify the clientApplcompat property if the 'NULLID' packages at the server were bound with APPLCOMPAT(V12R1M501) or higher. Otherwise, the connection failed with SQLCODE -30025, possibly with message DSNL076I.

LISTAGG

APPLCOMPAT(V12R1M502)

- Explicit Casting to GRAPHIC/VARGRAPHIC

- Database Controlled Pervasive Encryption

FL503

Db2 AI for z/OS Support,

- -START ML (Db2)

- -STOP ML (Db2)

- -DISPLAY ML (Db2)

Temporal Auditing

REPLICATION_OVERRIDE

FL504

- Z14 (and above) Huffman Compression

- Prevent Creation of Deprecated Objects. Packages bound with APPLCOMPAT(V12R1M504) cannot CREATE

- SYNONYMS

- SEGMENTED (non-UTS) tablespaces

- Partitioned (non-UTS) tablespaces

- Hash-Organized Tables

This only affects the creation of new objects from packages bound APPLCOMPAT(V12R1M504) and above.

- Use – “SET CURRENT APPLICATION COMPATIBILITY = ‘V12R1M503’” to allow creation of these objects, if necessary, on application/tooling bound at higher levels

- Can be set in “Profile Tables”

- IDAA Built-in Functions(BIF) Passthrough

- New Syntax Alternative

FL505/APPLCOMPAT(V12R1M505)

FL505

- Improved Hybrid Transactional Analytical Processing

- Ability to have true ISO(CS) CURRENT DATA(NO) query processing on IDAA

- Rebind Phase-in for packages being used for execution

- RUNSTATS automatic page sampling

- Incompatible change if STATPGSAMP=DEFAULT (with activation of FL – essentially STATPGSAMP=YES)

- Improved Hybrid Transactional Analytical Processing

APPLCOMPAT(V12R1M505)

- Index support for DECFLOAT

- System-period temporal tables and archive-enabled tables can be referenced in WHEN clauses of both basic and advanced triggers

Functions ENCRYPT_DATAKEY, DECRYPT_DATAKEY

FL506

- DROP Behavior is controlled by APPLCOMPAT(V12R1M506). Consistent support for dropping implicitly and explicitly created tablespaces

- If you drop a base table that resides in an explicitly created universal table space, Db2 implicitly drops the table space along with the table instead of returning an error in SQLCODE -669.

- If you drop a system-period temporal table or an archive- enabled table, Db2 also drops the associated history table or archive table. If the history table or archive table resides in an explicitly created universal table space, Db2 implicitly drops the table space along with the table instead of returning an error in SQLCODE -669.

- If you drop an auxiliary table that resides in an explicitly created LOB table space, Db2 implicitly drops the table space along with the table. Previously, the LOB table space remained as an empty table space. An auxiliary table is dropped when you take one of the following actions:table1+ Issue the DROP TABLE statement on the auxiliaryrunning the REORG utility on a partition-by-growth table space1+ Drop the associated base table2+ Drop an associated LOB column3+ Remove an associated trailing empty partition when

FL507

Deletion of old statistics when using profiles

- When you run the RUNSTATS, REORG, or LOAD with the USE PROFILE option.

- Db2 collects only those statistics that are included in the specified profile. Beginning in function level 507, Db2 now also deletes existing statistics that are not part of the profile. All frequency, key cardinality, and histogram statistics that are not included in the profile are deleted from the catalog for the target object. This new behavior provides a way for you to remove stale distribution statistics without impacting concurrently running dynamic SQL.

- When you run the RUNSTATS, REORG, or LOAD with the USE PROFILE option.

NUMLKUS and NUMLKTS Global Variables

IDAA BIF Passthrough

CREATE or REPLACE for stored procedures

FL08

- FTB Support for Non-Unique Indexes

- Multi-Table Segmented to PBG

FL09

- Tamper Proof Audit Policies

- High Availability Accelerator Tables

- Huffman Compression at Object Level

- UPDATE and DELETE for Temporal RI

FL10

Activation of FL 510 activates all previous FLs and prepares the system for migration to Db2 Apollo

4BJ - Parallel Sysplex Basics

Speaker: Steve Warren Source: Slides

What is Sysplex ?

A group of coupled yet distinct clustered instances of z/OS images that cooperate to provide a virtual single z/OS instance view to applications with the goal for high availability and capacity by providing:

- Messaging between the various images in the sysplex

- Data sharing between all the images.

- Coupling Facility is intermediary between servers and data

- Server can make one simple call to CF to get serialization to make updates.

Sysplex is two or more systems(limited to 32 systems) working in a cluster and these images could be in same CPC or different box.

Sysplex is two or more systems(limited to 32 systems) working in a cluster and these images could be in same CPC or different box.

- XCF Manages communication between two or more systems that communication goes over something called Signaling Paths.

- XCF uses couple data sets(CDS) to manage configuration and state information related to sysplex.

- XES is another component of the operating system that communicates with the common storage of the sysplex, coupling facility.

- Coupling facility is another operating system(CFCC, coupling facility control code) running in another LPAR in a CPC. Using XES, application are allowed to write to these common shared storage.

There are two types of Coupling Facility

- CF : External Coupling Facility or Standalone Coupling Facility, what this means is, that particular CPC, mainframe box only contains CF image or images. Expensive but good technique.

- ICF : Internal Coupling Facility, here CF is running in a LPAR in a CPC and there are also other LPARs running other operating systems.

Node Management

- Sympathy sickness ( system becomes unresponsive )

- Detecting unresponsive system

- Status information is held in CDS

- Every few seconds each system is going read Time of Day(TOD) for each peer system. If TOD, Signaling paths, status time of day exceed Failure detection interval(FDI) then conclude subject system is unresponsive

- Sysplex partitioning is the act of removing a particular z/OS image out of the sysplex.

- Isolation techniques

More on CDS ( Primary and Alternate )

More on XCF

More on XCF Signaling Paths

More on Coupling Facility (List, Lock and Cache Structures)

CF Structure Rebuild

Rebuild is the process by which the sysplex allocates a new instance of a given CF structure, populates that structure with data, and proceeds to use the new structure instancce.

There are two types of rebuild,

- Simplex - Create and use new instance of structure. Discard the old instance

- Duplex

- Create secondary instance of structure

- Synchronize updates so both structures stay the same

- Can quickly failover to one or the other